How to convert a website to an LLM-friendly format?

Struggling to get accurate summaries from LLMs when using website content? Learn how SemaReader converts web pages into LLM-friendly formats, improving the quality of AI-generated summaries and reports.

TECHNICAL

11/4/2024 · 3 min read

Large language models (LLMs) lack up-to-date information, and when they are asked to summarise recent events or generate a report without relevant context, they hallucinate. While working on a project to summarise a company’s web content, we noticed two failure modes:

Giving little to no context causes the LLMs to hallucinate. They cannot perform the task without the necessary information.

Giving too much context, e.g. a company's entire website, degrades the generation quality. The models ignore chunks of the input, repeat content and slow down significantly.

These problems meant that we needed to extract content from websites in a very focused fashion, without relying on the LLM's ability to process raw unstructured text or HTML.

Convert with SemaReader

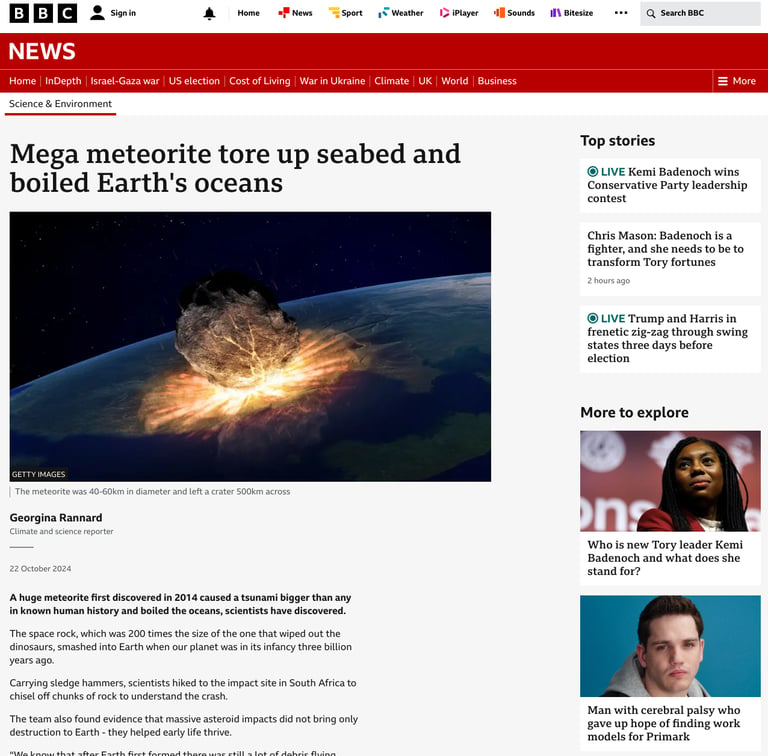

To solve this problem, we designed a new API service called SemaReader, which converts a web page into an LLM-friendly markdown format by extracting and filtering its content. It targets what it deems to be the main content of the page whilst preserving its structure as much as possible.

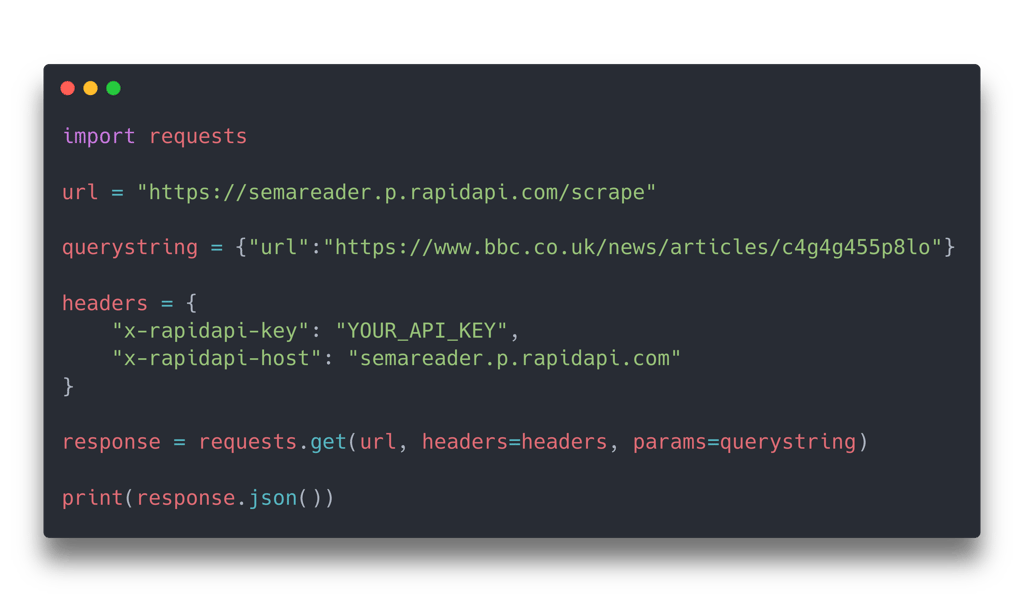

To get started, all you need to do is make a GET request to the API on RapidAPI using your favourite programming language.

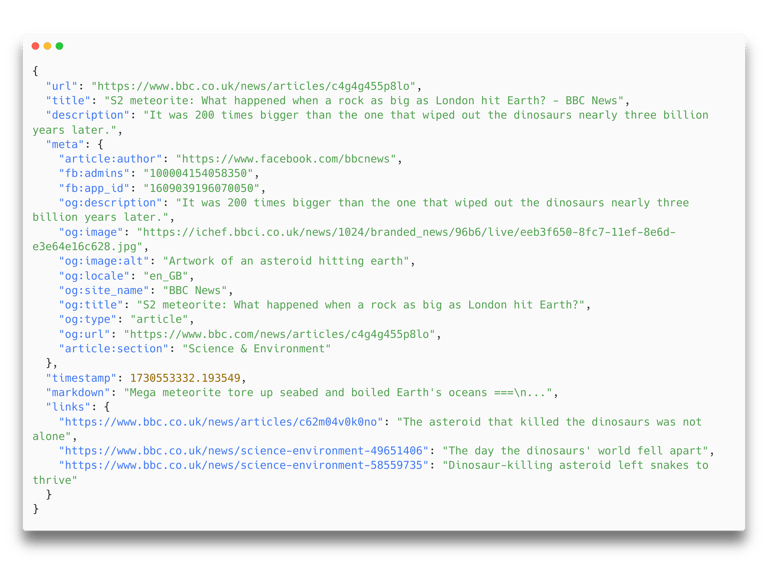

The request converts a web page into structured JSON output that includes the LLM-friendly markdown. The response also returns the page metadata, title and description.
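As a rough illustration, the request could be built in Python as in the sketch below. The host, path, `url` query parameter and `markdown` response field are placeholders assumed for this example, not the documented SemaReader API, so check the RapidAPI listing for the real values. The `X-RapidAPI-Key` and `X-RapidAPI-Host` headers are the ones RapidAPI normally expects.

```python
import json
import urllib.parse
import urllib.request

# Placeholder values (assumptions): copy the real host and path from RapidAPI.
API_HOST = "semareader.example.invalid"
API_PATH = "/read"


def build_request(page_url: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a GET request for one web page."""
    # The "url" query parameter name is an assumption for illustration.
    query = urllib.parse.urlencode({"url": page_url})
    return urllib.request.Request(
        f"https://{API_HOST}{API_PATH}?{query}",
        headers={"X-RapidAPI-Key": api_key, "X-RapidAPI-Host": API_HOST},
        method="GET",
    )


def fetch_markdown(page_url: str, api_key: str) -> str:
    """Send the request and return the markdown field of the JSON response."""
    with urllib.request.urlopen(build_request(page_url, api_key), timeout=30) as resp:
        payload = json.load(resp)
    # The "markdown" field name is assumed; inspect a real response to confirm.
    return payload.get("markdown", "")
```

Keeping the request construction separate from the network call makes it easy to inspect the URL and headers before spending any API quota.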

How does it work?

The pre-processing of the HTML simply removes unwanted tags, such as scripts, to reduce the size. The main algorithm then uses two features:

Content score: This is a learnt score that estimates the quality of content, such as a text-rich paragraph versus a link-only navbar.

Consistency score: The consistency score attempts to preserve the structure of the web page and acts as a countermeasure to the content score.

The content score attempts to remove as much content as possible that it deems low-value or noise for an LLM, while the consistency score aims to maintain the structure. For example, if there is a navigation bar between two high-content paragraphs, then the two scores working together might keep it.
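To make the interplay between the two scores concrete, here is a toy sketch. It is not SemaReader's algorithm: the real content score is learnt, whereas this example substitutes a hand-written link-density heuristic, and the `Block` type, the neighbour averaging, the `weight` and the `threshold` are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Block:
    text_chars: int  # total characters of visible text in the block
    link_chars: int  # how many of those characters sit inside links


def content_score(block: Block) -> float:
    """Stand-in heuristic: share of non-link text, damped for short blocks."""
    if block.text_chars == 0:
        return 0.0
    non_link_share = 1 - block.link_chars / block.text_chars
    length_factor = min(block.text_chars / 200, 1.0)
    return non_link_share * length_factor


def consistency_score(scores: list[float], i: int) -> float:
    """Average content score of the two neighbours; a missing one counts as 0."""
    left = scores[i - 1] if i > 0 else 0.0
    right = scores[i + 1] if i + 1 < len(scores) else 0.0
    return (left + right) / 2


def keep_mask(blocks: list[Block], weight: float = 0.5, threshold: float = 0.4) -> list[bool]:
    """Blend both scores per block and keep those that reach the threshold."""
    scores = [content_score(b) for b in blocks]
    return [
        (1 - weight) * s + weight * consistency_score(scores, i) >= threshold
        for i, s in enumerate(scores)
    ]


paragraph_a = Block(text_chars=600, link_chars=20)
navbar = Block(text_chars=80, link_chars=80)
paragraph_b = Block(text_chars=500, link_chars=0)

# Navbar sandwiched between two paragraphs: its neighbours pull it over the threshold.
print(keep_mask([paragraph_a, navbar, paragraph_b]))
# Navbar at the top of the page: only one strong neighbour, so it is dropped.
print(keep_mask([navbar, paragraph_a, paragraph_b]))
```

In this sketch the same navbar is kept or dropped depending only on where it sits, which is the balancing behaviour described above.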

A limitation of SemaReader is that it does not handle JavaScript rendering, for security reasons. A JavaScript-rendered web page doesn’t send the HTML content directly; instead, it uses JavaScript to load the data and then render it dynamically. This approach is more common in web applications than in content-heavy websites such as news sites and blogs. Similarly, the company website we were working on as part of the project didn’t have JavaScript-rendered single-page content.

What about long context LLMs?

LLMs are gaining increasingly long context windows, which allow you to include not just a single web page but a company's entire web content in one go. However, our experiments and experience showed that the more content you add, the lower the generation quality becomes. The LLMs we tested:

Ignored large chunks of context when there was too much of it.

Repeated content from similar segments as opposed to using more of the context.

Slowed down significantly due to the large input prompt.

Our current approach is to break each website down into individual pages and build a response step by step. Using SemaReader, we can process one page at a time with a clean input and follow the links to the other pages. In fact, we use an agentic LLM to determine which pages it should follow and read next.
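The page-by-page loop might look roughly like the sketch below. Both callables are hypothetical stand-ins: `read_page` represents a SemaReader call that returns a page's markdown and its outgoing links, and `choose_next` represents the agentic LLM that picks the next link. Neither reflects a documented interface.

```python
from typing import Callable, Optional

# Hypothetical signatures, assumed for this sketch:
#   read_page(url) -> (markdown, links found on the page)
#   choose_next(notes so far, candidate links) -> next url, or None to stop
ReadPage = Callable[[str], tuple[str, list[str]]]
ChooseNext = Callable[[list[tuple[str, str]], list[str]], Optional[str]]


def read_site(
    start_url: str,
    read_page: ReadPage,
    choose_next: ChooseNext,
    max_pages: int = 5,
) -> list[tuple[str, str]]:
    """Read one page at a time, letting choose_next decide where to go next."""
    notes: list[tuple[str, str]] = []
    visited: set[str] = set()
    url: Optional[str] = start_url
    while url is not None and url not in visited and len(visited) < max_pages:
        visited.add(url)
        markdown, links = read_page(url)
        notes.append((url, markdown))
        # Only offer pages that have not been read yet.
        candidates = [link for link in links if link not in visited]
        url = choose_next(notes, candidates) if candidates else None
    return notes
```

The `max_pages` cap keeps the total context small, in line with the observation that adding more content lowers generation quality.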
